SIAM Conference on Imaging Science (IS22)

Together with Leon Bungert and Daniel Tenbrinck we organized a three-part Minisymposium for the SIAM conference IS22 on Recent Advances on Stable Neural Networks.

Abstract

Modern machine learning methods, in particular deep learning approaches, have enjoyed unparalleled success in a variety of challenging application fields, such as image recognition and medical image reconstruction. While previous research focused on achieving high accuracy and good generalization ability, a consensus has emerged that other properties like stability and robustness are of equal importance. This view is supported in particular by the instabilities of neural networks revealed through adversarial attacks, which use intentionally and imperceptibly distorted images to fool the network into misclassification.

In this three-part minisymposium we gather researchers from both mathematics and machine learning who have been driving the research in this field. We aim to spark new collaborations in this vibrant intersection and to offer a platform for scientific exchange. The covered topics include regularization methods for training as well as the design of inherently stable network architectures.

Program

| Speaker | Title |
|---|---|
| Tim Roith | Regularize the Adversary: The Role of the Lipschitz Constant in Modern Machine Learning |
| Tom Goldstein | Think Deeper, Generalize Better: Solving Logical Reasoning Problems with Recurrent Thinking Systems |
| Leon Bungert | A New Perspective on Adversarial Training as Nonlocal Geometric Problem |
| Mark Sellke | A Universal Law of Robustness via Isoperimetry |
| Jan Stanczuk | Wasserstein GANs Work Because They Fail (to Approximate the Wasserstein Distance) |
| Matthias Hein | Certified Adversarial Robustness on Out-of-Distribution Data without Tears |
| Elena Celledoni | Structure Preserving ODE Formulations: Invertibility and Contractivity |
| Borjan Geshkovski | Control and Stability in Learning via Neural ODEs |
| Michael Unser | Functional Optimization of Free-Form Activations with Tight Lipschitz Constraint in Deep Neural Networks |
| Patricia Pauli | Neural Network Training with Matrix Inequality Constraints - Robustness via Lipschitz Bounds |
| Johannes Hertrich | Convolutional Proximal Neural Networks and Plug-and-Play Algorithms |
| Kevin Roth | Adversarial Robustness and Regularization of Deep Neural Networks |

The Role of the Lipschitz Constant

During this Minisymposium I gave an introductory talk concerning instabilities in machine learning and how Lipschitz methods can help to overcome these issues. You can find a video of the talk below.

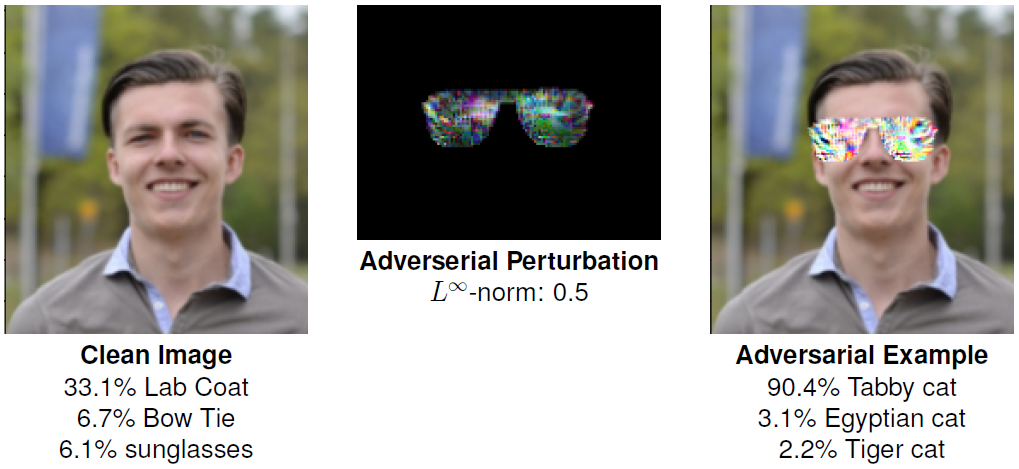

In preparation for this talk, I had some fun with adversarial examples, as can be observed in the following picture.

Here, a ResNet50 trained on the ImageNet dataset is used. Since this dataset does not have a dedicated human class, the picture of myself is out-of-distribution and should therefore be classified with low confidence. This is not the case. Moreover, with a few steps of a targeted signed gradient attack, we can convince the network that I am a tabby cat.

The perturbation does not need to be added globally. We can also prescribe a certain shape for the perturbation, as can be seen here.
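
The two ideas above, a targeted signed gradient attack and a perturbation restricted to a prescribed shape, can be illustrated with a minimal NumPy sketch. This is not the code used for the pictures: a toy linear softmax classifier stands in for the ResNet50, and the function name `targeted_sign_attack`, the parameters `step`, `n_steps`, `eps`, `mask`, and all numerical values are assumptions chosen for illustration only.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax of a 1-D logit vector."""
    e = np.exp(z - z.max())
    return e / e.sum()


def targeted_sign_attack(W, b, x, target, step=0.01, n_steps=20, eps=0.1, mask=None):
    """Targeted signed gradient attack on a toy linear softmax classifier.

    Hypothetical helper: the model is logits = W @ x + b, not a real network.
    `mask` (same shape as x, entries in {0, 1}) limits where x may change.
    """
    if mask is None:
        mask = np.ones_like(x)  # no mask given: perturb every pixel
    onehot = np.zeros(W.shape[0])
    onehot[target] = 1.0
    x_adv = x.copy()
    for _ in range(n_steps):
        p = softmax(W @ x_adv + b)
        # Gradient of the cross-entropy loss for `target` w.r.t. the input.
        grad = W.T @ (p - onehot)
        # Signed step that decreases the target loss, only inside the mask.
        x_adv = x_adv - step * np.sign(grad) * mask
        # Keep the perturbation within an l-infinity ball of radius eps ...
        x_adv = x + np.clip(x_adv - x, -eps, eps)
        # ... and keep pixel values in the valid range [0, 1].
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.normal(size=(3, 64))   # 3 classes, flattened 8x8 "image"
    b = np.zeros(3)
    x = rng.uniform(size=64)
    pred = int(np.argmax(W @ x + b))
    target = (pred + 1) % 3        # any class other than the current one

    # Global perturbation.
    x_glob = targeted_sign_attack(W, b, x, target)

    # Perturbation restricted to the central 4x4 patch.
    patch = np.zeros((8, 8))
    patch[2:6, 2:6] = 1.0
    x_patch = targeted_sign_attack(W, b, x, target, mask=patch.ravel())

    for name, xa in [("clean", x), ("global", x_glob), ("patch", x_patch)]:
        probs = softmax(W @ xa + b)
        print(name, "prediction:", int(np.argmax(probs)),
              "target probability:", float(probs[target]))
```

For a real network, the gradient would come from automatic differentiation rather than the closed-form expression used here. The mask works the same way: it is multiplied into the signed step, so pixels outside the prescribed shape are never changed.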